In the world of large language models (LLMs), few topics generate more intrigue—and complexity—than memory. While we’ve seen astonishing leaps in capabilities from GPT-3 to GPT-4o and beyond, one crucial bottleneck remains: long-term memory.

Today’s LLMs are incredibly good at reasoning over the contents of their prompt. But what happens when that prompt disappears? How can a model “remember” user preferences, conversations, or newly learned facts weeks later?

The Difference Between Short-Term and Long-Term Memory

Let’s start by breaking down the two core types of memory in LLMs:

- Short-Term Memory (STM): This is the context window—the stream of tokens (text) the model can see at any given time. For GPT-4o, that window is up to 128,000 tokens. While large, it’s still fleeting and resets with every new session.

- Long-Term Memory (LTM): This is the ability to retain information across time, beyond the current session, and reuse it intelligently.

Unlike humans, LLMs don’t inherently “remember” anything once a conversation ends. True long-term memory requires augmentation: an external store, a retrieval step, or an update to the model’s weights.
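To make the short-term side concrete, here is a minimal sketch of a context window as a token-budgeted buffer. The `ContextWindow` class and `count_tokens` helper are hypothetical names invented for illustration, and splitting on whitespace is only a crude stand-in for a real tokenizer.

```python
from collections import deque


def count_tokens(text: str) -> int:
    """Assumption: one token per whitespace-separated word (real tokenizers differ)."""
    return len(text.split())


class ContextWindow:
    """Hypothetical rolling buffer that keeps only what fits in a token budget."""

    def __init__(self, max_tokens: int) -> None:
        self.max_tokens = max_tokens
        self.messages = deque()  # oldest message on the left
        self.used = 0

    def add(self, message: str) -> None:
        self.messages.append(message)
        self.used += count_tokens(message)
        # Evict the oldest messages until the budget is respected again.
        while self.used > self.max_tokens and len(self.messages) > 1:
            self.used -= count_tokens(self.messages.popleft())

    def prompt(self) -> str:
        return "\n".join(self.messages)


window = ContextWindow(max_tokens=8)
window.add("My name is Ada")          # 4 tokens
window.add("I prefer short answers")  # 4 tokens, budget now full
window.add("What is my name?")        # 4 more tokens, so the oldest message is evicted

new_session = ContextWindow(max_tokens=8)  # a fresh session starts with nothing
```

Once the budget is exceeded, the message containing the user's name is gone, and a new session starts empty. That is the gap the long-term strategies in this post try to close.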

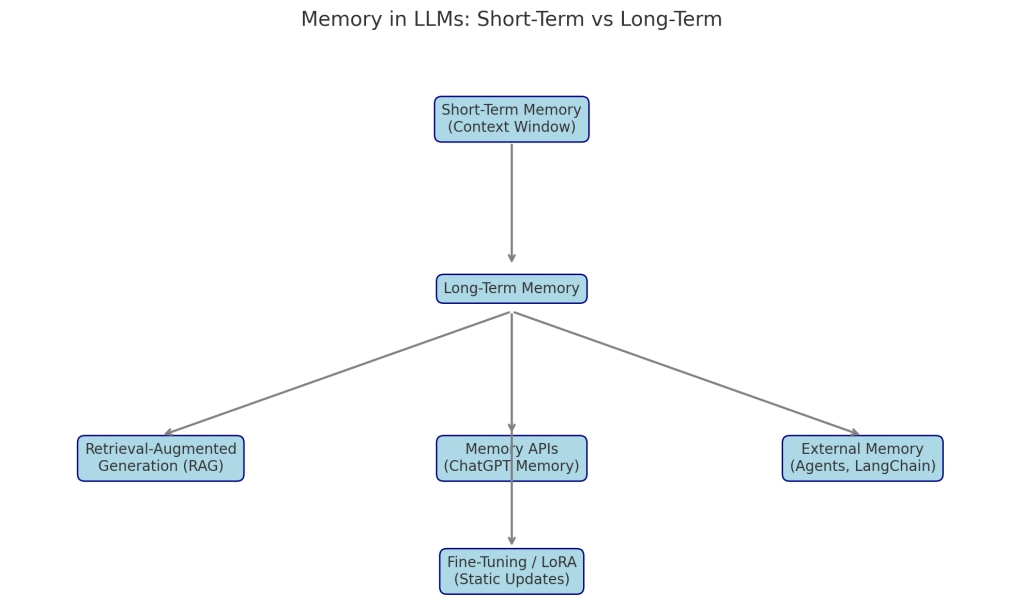

Memory in LLMs: Conceptual Diagram

To understand how LLMs are evolving toward long-term memory, let’s look at the structure:

Figure 1: Short-term vs. long-term memory strategies in LLMs

At the top, we have short-term memory—the context window. This is where all real-time reasoning and comprehension happens.

Beneath that sits long-term memory, which can be powered by different technical strategies, including:

- Retrieval-Augmented Generation (RAG)

- Memory APIs (like ChatGPT Memory)

- External Agents (e.g., LangChain, MemGPT)

- Fine-Tuning or LoRA (Low-Rank Adaptation)

Each method tries to bridge the gap between stateless prompts and persistent understanding.
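As a rough illustration of the first strategy, retrieval-augmented generation, the sketch below ranks stored notes against a query and prepends the best match to the prompt. Everything here is an assumption made for the example: the `embed`, `cosine`, `retrieve`, and `build_prompt` functions are hypothetical, and word counts stand in for the learned embeddings and vector database a real system would use.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding (assumption): lowercase word counts instead of a learned vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, documents: list[str], k: int = 1) -> list[str]:
    """Return the k stored documents most similar to the query."""
    q = embed(query)
    ranked = sorted(documents, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, documents: list[str], k: int = 1) -> str:
    """Place the retrieved notes ahead of the question, inside the context window."""
    context = "\n".join(f"- {d}" for d in retrieve(query, documents, k))
    return f"Relevant notes:\n{context}\n\nQuestion: {query}"


# Hypothetical long-term notes about one user.
notes = [
    "The user prefers answers in metric units.",
    "The user's favorite programming language is Rust.",
    "The user lives in Lisbon.",
]
question = "Which programming language does the user like?"
top = retrieve(question, notes)
prompt = build_prompt(question, notes)
```

The model itself stays stateless. Persistence comes from the store, and only the retrieved slice of it spends context-window tokens.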

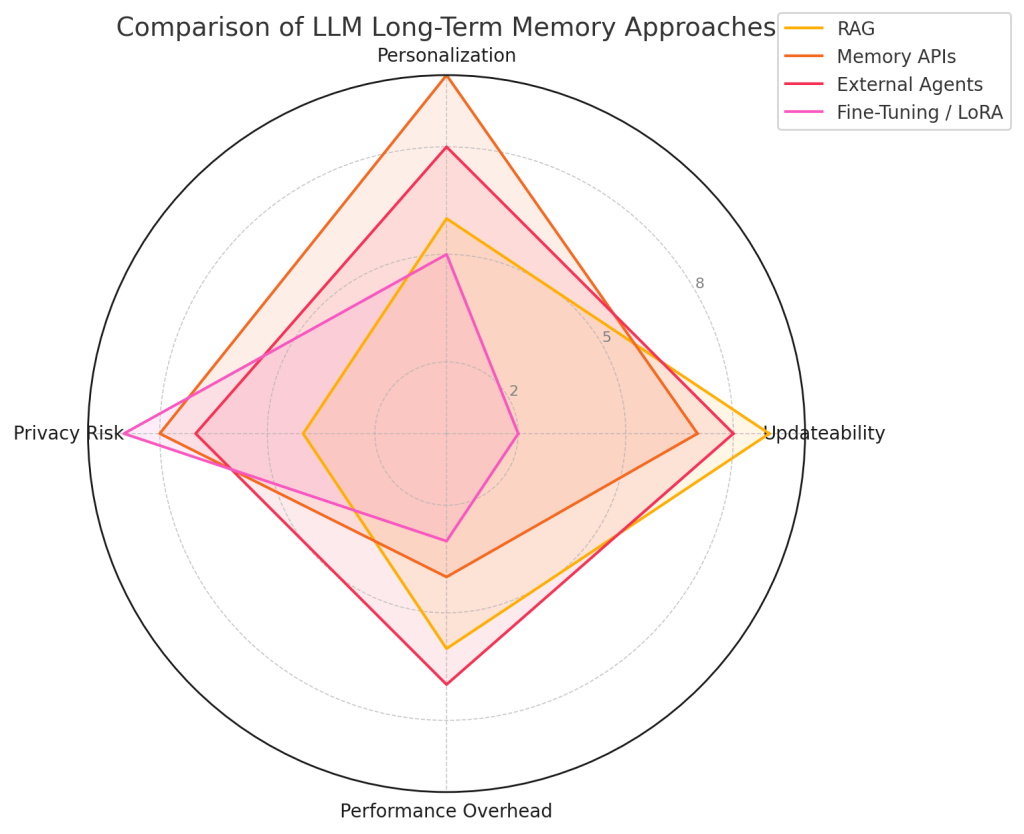

Comparing Long-Term Memory Solutions

Here’s where it gets interesting. Each approach has trade-offs across four key dimensions:

- Updateability – How easily can memory be changed?

- Personalization – How well does it tailor to individual users?

- Privacy Risk – Is sensitive information being stored or exposed?

- Performance Overhead – Does it slow things down?

Figure 2: Radar comparison of long-term memory strategies in LLMs

Summary of Each Approach

| Memory Type | Strengths | Weaknesses |
|---|---|---|
| RAG | Dynamic, scalable, easy to implement | Personalization is limited, retrieval quality varies |
| Memory APIs | Seamless UX, user-controlled memory | High privacy concerns, still evolving |
| External Agents | Modular, composable, persistent | Can be slow, hard to manage securely |
| Fine-Tuning / LoRA | Precise recall, baked-in knowledge | Costly to update, memory becomes static, risky with PII |

The Road Ahead: Human-Inspired Memory?

Just like humans separate memory into episodic (experiences), semantic (facts), and procedural (skills), future LLMs may do the same. We might see:

- Layered memory architectures that mimic human brain structures

- Self-updating LLMs that autonomously refine their internal knowledge

- User-governed memory systems with options to “remember this” or “forget that”

Some research systems, such as MemGPT, are already experimenting with this. But the key will be balancing personalization, privacy, and control.
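One way to picture a user-governed, layered memory is a small store with explicit “remember” and “forget” operations. This is a sketch under stated assumptions, not how any particular product works: the `MemoryStore` class, its method names, and the three-layer dictionary layout are all hypothetical.

```python
from dataclasses import dataclass, field

# The three human-inspired kinds of memory named above.
KINDS = ("episodic", "semantic", "procedural")


@dataclass
class MemoryStore:
    """Hypothetical per-user store with one dictionary per memory kind."""

    layers: dict = field(default_factory=lambda: {kind: {} for kind in KINDS})

    def remember(self, kind: str, key: str, value: str) -> None:
        """Handle an explicit 'remember this' request from the user."""
        if kind not in self.layers:
            raise ValueError(f"unknown memory kind: {kind}")
        self.layers[kind][key] = value

    def forget(self, key: str) -> bool:
        """Handle 'forget that': delete the key from every layer."""
        removed = False
        for layer in self.layers.values():
            if key in layer:
                del layer[key]
                removed = True
        return removed

    def recall(self, kind: str) -> dict:
        """Return a copy so callers cannot mutate stored memory by accident."""
        return dict(self.layers[kind])


memory = MemoryStore()
memory.remember("semantic", "diet", "vegetarian")
memory.remember("episodic", "last_trip", "visited Kyoto in spring")
memory.forget("last_trip")  # the user asks the assistant to drop this
```

Writes and deletions happen only through explicit calls, which keeps the user in control of what persists. That is the property the privacy and consent questions below depend on.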

Conclusion

Memory is the next great leap for language models. While today’s models rely heavily on short-term context, the LLMs of tomorrow are likely to offer rich, personalized, and persistent understanding, behaving more like intelligent assistants than stateless chatbots.

And with that evolution, the questions won’t just be technical. They’ll be ethical, regulatory, and human:

- What should models remember?

- Who decides?

- How do we ensure privacy and consent?

We’re not far from finding out.

Want to go deeper? Follow the blog or reach out for future posts exploring real-world use cases, memory agents, and code examples for production-grade memory architectures.