Machine learning models have transformed industries, from diagnosing diseases to predicting customer behavior. However, building a robust model is just half the battle. The real value comes from understanding what your ML results mean and how to communicate them responsibly. For data scientists, ML engineers, and tech professionals alike, interpreting machine learning results is as crucial as developing the models themselves.

As Cynthia Rudin, a Professor at Duke University, emphasizes, “Interpretability is not just a technical requirement but an ethical one, especially in high-stakes domains like healthcare and criminal justice” (Nature Machine Intelligence). In this post, we will explore the tools, context, and best practices for effectively interpreting ML results.

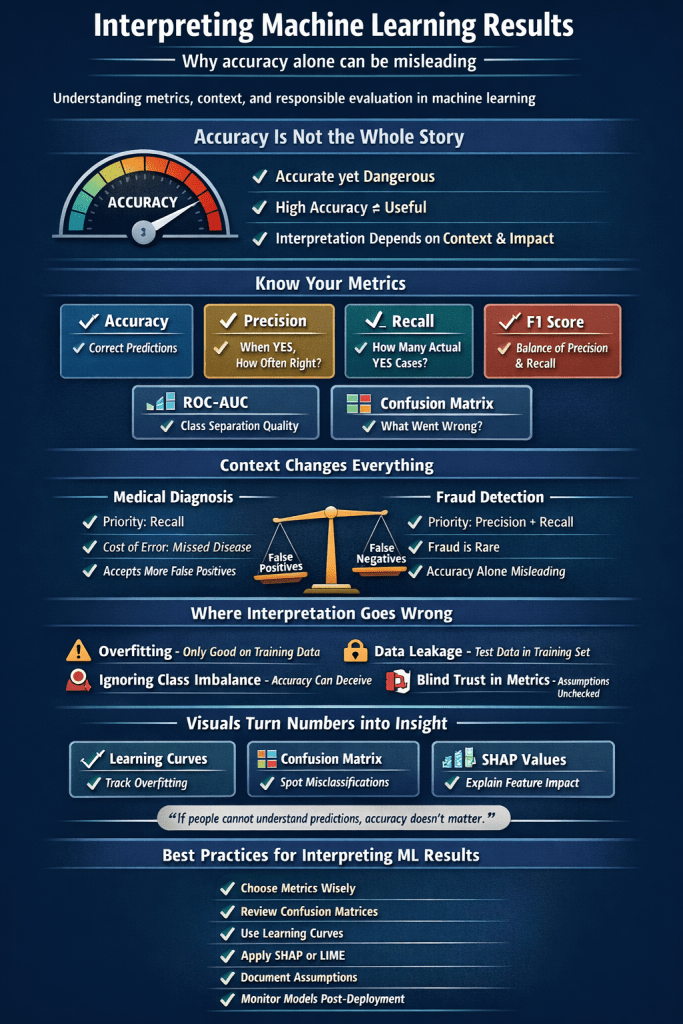

Understanding Evaluation Metrics

Metrics are your window into model behavior, but careful metric selection is essential for meaningful interpretation. Here’s a breakdown of key metrics:

Key Metrics Explained

- Accuracy: The fraction of correct predictions. Useful mainly for balanced datasets.

- Precision: Of all predicted positives, the fraction that are actually positive. Analogy: If a cancer test says “positive,” how likely is it that the patient has cancer?

- Recall (Sensitivity): Of all actual positives, the fraction the model caught. Analogy: Of all patients with cancer, how many did the test successfully identify?

- F1-score: The harmonic mean of precision and recall. It matters most when you need to balance both metrics, especially when the data is skewed.

- ROC-AUC (Receiver Operating Characteristic, Area Under Curve): Measures the model’s ability to distinguish between classes across all thresholds. A value close to 1 indicates excellent discrimination, while 0.5 is no better than random guessing.

- Confusion Matrix: This table reveals the true positives, true negatives, false positives, and false negatives, giving a detailed view of model performance.
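To make the definitions above concrete, here is a minimal sketch that derives accuracy, precision, recall, and F1-score from the four confusion-matrix counts. The function name and the counts are hypothetical, chosen only for illustration.

```python
def classification_metrics(tp, fp, fn, tn):
    """Return accuracy, precision, recall and F1 from confusion-matrix counts."""
    total = tp + fp + fn + tn
    accuracy = (tp + tn) / total
    # Guard against division by zero when there are no predicted
    # positives or no actual positives.
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


# Hypothetical screening results (made-up counts, not real data).
metrics = classification_metrics(tp=80, fp=20, fn=20, tn=880)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

In this made-up case accuracy is 0.96 while precision and recall are both 0.80, which shows how a large pool of true negatives can flatter the accuracy figure.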

Practical Example: Medical Testing

Imagine developing a cancer-detection algorithm. Here, prioritizing recall is imperative: high recall means that as many actual cancer cases as possible are detected (minimizing false negatives). However, focusing solely on recall tends to increase false positives (healthy individuals flagged as having cancer), which can lead to unnecessary stress and interventions. The right evaluation metric depends heavily on the context and on the consequences of each kind of mistake in that particular domain.
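The recall versus false-positive trade-off can be sketched by scoring the same predictions at two decision thresholds. The scores, labels, and thresholds below are invented for illustration and do not come from a real screening model.

```python
def precision_recall_at(threshold, scores, labels):
    """Precision and recall when scores >= threshold are called positive."""
    predicted = [s >= threshold for s in scores]
    tp = sum(p and y == 1 for p, y in zip(predicted, labels))
    fp = sum(p and y == 0 for p, y in zip(predicted, labels))
    fn = sum((not p) and y == 1 for p, y in zip(predicted, labels))
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


# Hypothetical model scores and true labels (1 = cancer, 0 = healthy).
scores = [0.95, 0.85, 0.70, 0.60, 0.45, 0.40, 0.30, 0.20, 0.10, 0.05]
labels = [1, 1, 0, 1, 1, 0, 0, 1, 0, 0]

strict = precision_recall_at(0.5, scores, labels)
lenient = precision_recall_at(0.15, scores, labels)
print(f"threshold 0.50: precision={strict[0]:.3f}, recall={strict[1]:.3f}")
print(f"threshold 0.15: precision={lenient[0]:.3f}, recall={lenient[1]:.3f}")
```

On this toy data, lowering the threshold catches every positive case but admits more false alarms, which is the trade-off described above.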

For more in-depth reading, check the scikit-learn Model Evaluation User Guide.

The Importance of Context: When Numbers Mislead

It’s vital to consider metrics in their specific context. Here are critical insights:

- Imbalanced Datasets: For fraud detection, where fraud might represent only 1% of transactions, a model predicting “no fraud” across the board can achieve a misleading accuracy of 99% while failing to capture any fraudulent activity. Metrics such as F1-score or ROC-AUC are much more informative in such scenarios.

- Business and Safety Priorities: Consider tumor detection; missing a tumor (a false negative) can be significantly more dangerous than a false alarm (a false positive). Therefore, higher recall might be prioritized over precision in these contexts.
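The imbalanced-data trap is easy to reproduce. The sketch below builds a synthetic dataset with 1% fraud (the numbers are made up to match the scenario above) and scores a "model" that always predicts "no fraud".

```python
# Synthetic labels: 100 fraudulent transactions out of 10,000 (1%).
labels = [1] * 100 + [0] * 9900

# A trivial baseline that never flags fraud.
predictions = [0] * len(labels)

accuracy = sum(p == y for p, y in zip(predictions, labels)) / len(labels)
caught = sum(p == 1 and y == 1 for p, y in zip(predictions, labels))
recall = caught / sum(labels)

print(f"accuracy: {accuracy:.2%}")  # looks strong
print(f"recall:   {recall:.2%}")    # no fraud is ever caught
```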

“If people—and, above all, domain experts—cannot understand how predictions are made, it doesn’t matter how accurate the system is.”

— Marco Ribeiro, Creator of LIME (LIME Paper, arXiv).

Common Pitfalls to Watch For

Even careful practitioners fall into interpretation traps:

- Overfitting: Your model performs impressively on training data but poorly on unseen data. Always validate performance on a separate test dataset and use learning curves to identify overfitting early.

- Data Leakage: If information that would not be available at prediction time, such as statistics computed on the test set, sneaks into training, metrics will be inflated. Keep training and test datasets strictly separate, and fit any preprocessing on the training data only (NIST AI RMF).

- Ignoring Class Imbalance: As noted earlier, accuracy can be deceptive with imbalanced classes. Report precision, recall, or F1-score for each class.

- Unexamined Assumptions: Don’t accept default metrics or unfamiliar datasets at face value. Check how the data was collected and labeled, and whether the metric reflects the outcome you actually care about.
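One common way leakage creeps in is preprocessing: computing scaling statistics on the full dataset lets the test set influence training. A minimal sketch of the safer pattern follows, using only the standard library and synthetic data; the variable names are illustrative assumptions.

```python
import random
import statistics

random.seed(0)
# Synthetic feature values standing in for a real column.
values = [random.gauss(50, 10) for _ in range(200)]

# Split first, before computing any statistics.
split = int(0.8 * len(values))
train, test = values[:split], values[split:]

# Fit the scaler on the training portion only.
train_mean = statistics.fmean(train)
train_std = statistics.stdev(train)


def standardize(xs):
    """Scale values using statistics learned from the training set."""
    return [(x - train_mean) / train_std for x in xs]


train_scaled = standardize(train)
test_scaled = standardize(test)  # reuses training statistics, no refit

print(f"train mean after scaling: {statistics.fmean(train_scaled):.3f}")
print(f"test mean after scaling:  {statistics.fmean(test_scaled):.3f}")
```

The test mean will generally not be exactly zero, and that is expected: the test set is treated as unseen data rather than being used to tune the transformation.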

Visualization: Making Results Intuitive

Visual tools can significantly enhance the interpretability of ML results:

Visualization Techniques

- Learning Curves: Plotting training and validation performance against the size of the training set helps diagnose overfitting or underfitting effectively.

- Confusion Matrix Heatmaps: Visualizing misclassifications aids quick identification of problematic areas.

- Feature Importance and SHAP Values: Most tree-based models can rank feature importance, but SHAP provides a consistent method to show how each feature contributes to each prediction. This can help clarify complex models and ensure stakeholder understanding (SHAP Documentation).
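To show what "how each feature contributes to each prediction" means, here is a small sketch that computes exact Shapley values for a toy scoring function by averaging over every feature ordering. It does not use the SHAP library or its API; the `fraud_score` function, its coefficients, and the baseline are hypothetical, and "absent" features are simply replaced by baseline values, which is one assumption among several possible ones.

```python
from itertools import permutations


def shapley_values(predict, x, baseline):
    """Exact Shapley values, replacing absent features with baseline values."""
    n = len(x)
    contributions = [0.0] * n
    orderings = list(permutations(range(n)))
    for order in orderings:
        current = list(baseline)
        previous_output = predict(current)
        for i in order:
            # Switch feature i from its baseline value to its actual value
            # and credit it with the resulting change in the output.
            current[i] = x[i]
            output = predict(current)
            contributions[i] += output - previous_output
            previous_output = output
    return [c / len(orderings) for c in contributions]


def fraud_score(features):
    """Hypothetical scoring function with one interaction term."""
    amount, distance_km, night = features
    return 0.002 * amount + 0.001 * distance_km + 0.3 * night * (amount > 500)


x = [1000, 200, 1]       # transaction being explained
baseline = [100, 10, 0]  # reference transaction

values = shapley_values(fraud_score, x, baseline)
for name, value in zip(["amount", "distance_km", "night"], values):
    print(f"{name}: {value:+.3f}")
```

The contributions sum to the difference between the score for `x` and the score for the baseline, which is the property that makes this style of attribution consistent across features. Exact enumeration is only practical for a handful of features.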

Example: Fraud Detection Dashboard

Consider a banking fraud detection dashboard that displays:

- A ROC curve showcasing improved discrimination capabilities.

- A confusion matrix with a low count of false negatives.

- SHAP summary plots indicating the most critical features—such as transaction size or geographical location—that contribute to fraud detection.
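The number summarizing a ROC curve can be read as the probability that a randomly chosen fraudulent transaction receives a higher score than a randomly chosen legitimate one. The sketch below computes ROC-AUC directly from that definition on invented scores; for real workloads a library implementation would be the usual choice.

```python
def roc_auc(scores, labels):
    """ROC-AUC as the fraction of positive/negative pairs ranked correctly."""
    positives = [s for s, y in zip(scores, labels) if y == 1]
    negatives = [s for s, y in zip(scores, labels) if y == 0]
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5  # ties count as half a win
    return wins / (len(positives) * len(negatives))


# Hypothetical fraud scores and true labels (1 = fraud).
scores = [0.9, 0.8, 0.7, 0.6, 0.4, 0.3, 0.2, 0.1]
labels = [1, 1, 0, 1, 0, 1, 0, 0]

auc = roc_auc(scores, labels)
print(f"ROC-AUC: {auc:.4f}")
```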

The Role of Domain Knowledge

Domain expertise is essential for accurately interpreting ML results. Why?

- Feature Relevance: A statistically significant feature may not have real-world relevance. Understanding contextual meaning can clarify why certain variables matter.

- Cost-Benefit Judgments: Domain stakeholders are best placed to weigh the costs of different errors, especially in regulated fields requiring strict oversight.

- Regulatory Considerations: Industries like healthcare and finance may mandate explanations for model predictions, making interpretability essential.

The NIST AI Risk Management Framework and ISO/IEC 24029-1:2021 emphasize the importance of thorough documentation and involving diverse stakeholders in interpretations.
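Cost-benefit judgments can be made explicit by attaching a cost to each error type. In the sketch below, the two models, their error counts, and the cost figures are all hypothetical; in practice the costs would come from domain experts.

```python
def total_error_cost(fp, fn, cost_fp, cost_fn):
    """Total cost of a model's mistakes under the given per-error costs."""
    return fp * cost_fp + fn * cost_fn


# Hypothetical error counts for two candidate models on the same test set.
model_a = {"fp": 40, "fn": 5}    # more false alarms, fewer misses
model_b = {"fp": 10, "fn": 15}   # fewer false alarms, more misses

# If both errors cost the same, model B looks better.
equal_a = total_error_cost(**model_a, cost_fp=1, cost_fn=1)
equal_b = total_error_cost(**model_b, cost_fp=1, cost_fn=1)

# If a miss is judged 20 times as costly as a false alarm, model A wins.
weighted_a = total_error_cost(**model_a, cost_fp=1, cost_fn=20)
weighted_b = total_error_cost(**model_b, cost_fp=1, cost_fn=20)

print(f"equal costs:    A={equal_a}, B={equal_b}")
print(f"weighted costs: A={weighted_a}, B={weighted_b}")
```

The preferred model flips once the asymmetry between error types is taken into account, which is why the cost figures deserve input from people who understand the domain.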

Best Practices: A Checklist for Responsible Interpretation

Are you ready to put interpretation at the center of your ML practice? Here’s a checklist to guide your approach:

- Select metrics based on your business and data context. Use F1-score or ROC-AUC over accuracy for imbalanced datasets.

- Always examine your confusion matrix. It’s essential for understanding the specifics behind your model’s performance.

- Leverage learning curves for early detection of overfitting or underfitting.

- Employ model-agnostic interpretability tools (SHAP or LIME) and share insights with stakeholders.

- Document all assumptions, testing procedures, and potential sources of bias. This transparency not only aids understanding but supports compliance.

- Engage domain experts in the validation process. Their input can clarify decisions and expose hidden issues.

- Monitor deployments to identify any model drift over time.
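As one possible way to monitor drift, the sketch below computes the Population Stability Index (PSI) between training-time scores and recent production scores. PSI is a technique chosen here for illustration, not one named in this post, and the bin edges and score values are invented.

```python
import math


def population_stability_index(expected, actual, edges):
    """PSI between two samples, binned by the given ascending edges."""
    def proportions(values):
        counts = [0] * (len(edges) + 1)
        for v in values:
            # Bin index is the number of edges the value meets or exceeds.
            counts[sum(v >= e for e in edges)] += 1
        # A small floor avoids log(0) when a bin is empty.
        return [max(c / len(values), 1e-6) for c in counts]

    exp_p = proportions(expected)
    act_p = proportions(actual)
    return sum((a - e) * math.log(a / e) for a, e in zip(act_p, exp_p))


edges = [0.25, 0.5, 0.75]
training_scores = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8]
stable_scores = list(training_scores)
shifted_scores = [0.5, 0.6, 0.7, 0.8, 0.8, 0.9, 0.9, 0.95]

psi_stable = population_stability_index(training_scores, stable_scores, edges)
psi_shifted = population_stability_index(training_scores, shifted_scores, edges)
print(f"PSI (no change): {psi_stable:.3f}")
print(f"PSI (shifted):   {psi_shifted:.3f}")
```

A PSI of zero means the binned distributions match, and larger values indicate a bigger shift. What counts as an actionable value is a convention that should be agreed with the team that owns the model.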


Conclusion

Interpreting machine learning results is not an afterthought; it is critical for responsible implementation and real-world impact. By selecting appropriate metrics, understanding contextual nuances, avoiding common pitfalls, visualizing effectively, and leveraging domain expertise, you can transform raw model outputs into actionable insights.

As you build your ML models, remember that the finish line is understanding: not just holding a number, but knowing what it signifies in the broader context.
