Continuing to capture lessons learned while earning my Master’s in Data Science from Boston University, I want to focus on how to create a real-world project that is repeatable. Most machine learning projects don’t fail because the model is bad. They fail because the project can’t be reproduced, automated, or safely evolved once the initial experiment is complete. That failure mode is rarely about algorithms; it’s about structure.

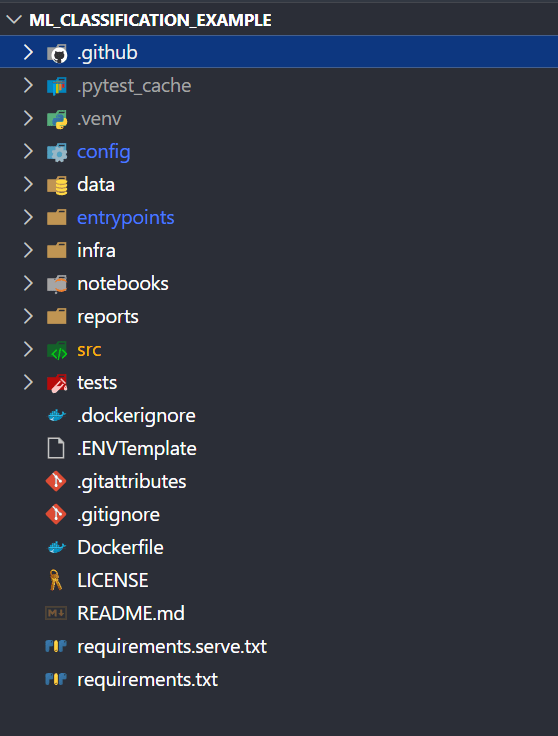

The repository at ml_classification_example is a useful example of how a machine learning project can be organized as a system, not just a collection of experiments. Rather than focusing on model architecture or performance metrics, this post looks at how the structure of the repository itself enables MLOps practices such as reproducibility, automation, and long-term maintainability. It is based on a dataset that I worked with in my Master’s program.

Structure Is the First MLOps Decision

MLOps is often introduced through tooling (pipelines, model registries, experiment tracking), but those tools only work if the underlying project is structured in a way that supports them. A repository that mixes notebooks, scripts, data files, and trained models in a single directory is difficult to automate and nearly impossible to scale.

This repository takes a different approach. From the top level, it clearly separates data, source code, experiments, and artifacts. That separation is subtle, but it’s foundational. It creates boundaries that make automation possible later without forcing a rewrite.

This mirrors long-standing software engineering principles: clear interfaces, separation of concerns, and explicit execution paths. MLOps simply extends those ideas across the machine learning lifecycle.

The README as an Operational Contract

The README.md functions as more than a project description; it serves as an operational contract. It explains what the project does, how to set it up, and how to execute it without relying on tribal knowledge.

This matters because automation depends on clarity. CI/CD systems, scheduled training jobs, and onboarding workflows all rely on documented entry points. In mature MLOps systems, pipelines are often derived directly from README instructions.

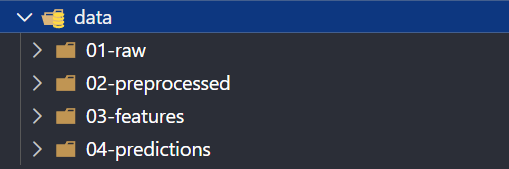

Isolating Data from Code

A key structural decision in this repository is the explicit separation of data from source code. Datasets live in a dedicated data/ directory, rather than being embedded directly into scripts or notebooks.

This separation supports reproducibility and portability. Data can be versioned independently, swapped across environments, or stored remotely without changing the training logic. It also creates a natural integration point for data versioning tools such as DVC or cloud object storage.
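As a minimal sketch of what this separation can look like in code, the helper below resolves the dataset location from the environment instead of hard-coding it. The variable name `ML_DATA_DIR` and both function names are assumptions made for illustration; they are not taken from the repository.

```python
import os
from pathlib import Path

# Hypothetical environment variable; the repository may use a different mechanism.
DATA_DIR_ENV = "ML_DATA_DIR"


def resolve_data_dir(default: str = "data") -> Path:
    """Return the dataset directory, preferring an environment override."""
    return Path(os.environ.get(DATA_DIR_ENV, default))


def dataset_path(filename: str) -> Path:
    """Build the path to a dataset file without hard-coding its location."""
    return resolve_data_dir() / filename
```

With a helper like this, training code asks for `dataset_path("train.csv")` and never needs to know whether the file lives in the local data/ directory or on a mounted volume in another environment.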

This approach aligns with reproducible data science best practices, where data, code, and results are treated as related but independent assets.

Notebooks Without Entanglement

Notebooks play an important role in exploration and analysis, but they become liabilities when they serve as the primary execution mechanism for a project. This repository avoids that trap by isolating notebooks to an exploratory role.

Core logic lives in the source directory rather than inside Jupyter cells. As a result, training and evaluation can run headlessly, without a notebook environment. This is a prerequisite for CI/CD, scheduled retraining, and cloud-based execution.

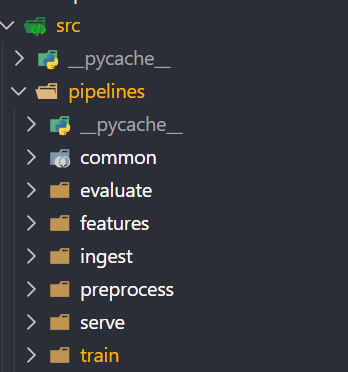

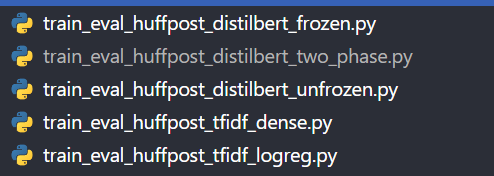

Source Code Designed for Automation

The src/ directory contains modular, reusable components for data loading, model training, evaluation, and shared utilities. This structure makes the project easier to test, refactor, and automate.
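To make the idea of small, composable components concrete, here is a sketch under stated assumptions: three functions for loading, training, and evaluating, each testable on its own. The function names are hypothetical, and a majority-class baseline stands in for the real classifier so the example runs with only the standard library.

```python
import csv
from collections import Counter
from typing import Callable, Iterable, List, Tuple

Row = Tuple[List[float], str]  # (feature values, label)
Model = Callable[[List[float]], str]


def load_rows(lines: Iterable[str]) -> List[Row]:
    """Parse CSV lines whose last column is the label; the first line is a header."""
    reader = csv.reader(lines)
    next(reader)  # skip the header row
    return [([float(v) for v in row[:-1]], row[-1]) for row in reader if row]


def train_majority(rows: List[Row]) -> Model:
    """Stand-in model: always predict the most frequent training label."""
    if not rows:
        raise ValueError("cannot train on an empty dataset")
    majority_label = Counter(label for _, label in rows).most_common(1)[0][0]
    return lambda features: majority_label


def accuracy(model: Model, rows: List[Row]) -> float:
    """Fraction of rows whose predicted label matches the true label."""
    if not rows:
        raise ValueError("cannot evaluate on an empty dataset")
    correct = sum(1 for features, label in rows if model(features) == label)
    return correct / len(rows)
```

Because each step takes plain inputs and returns plain outputs, the same functions can be called from a notebook, a test, or a pipeline step without modification.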

From an MLOps perspective, this enables unit testing, static analysis, and pipeline-driven execution. The same code can be reused across local development, CI pipelines, and production workloads without duplication. Microsoft’s MLOps reference architectures emphasize this modular approach as a requirement for scalable ML systems.

Configuration as a First-Class Concept

Behavior in this repository is controlled through configuration rather than hard-coded values. Hyperparameters, paths, and runtime options are externalized, allowing experiments to change without modifying code.

This design supports reproducibility, environment parity, and auditability. It also simplifies automation, since pipelines can run the same code with different configurations depending on the environment.
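One way to sketch configuration-driven behavior is a typed config object populated from JSON. The field names and defaults below are illustrative assumptions, not the repository's actual settings, and JSON is chosen only because it ships with the standard library.

```python
import json
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class TrainConfig:
    """Hypothetical training settings; every field has a safe default."""
    data_path: str = "data/train.csv"
    learning_rate: float = 0.01
    epochs: int = 10
    random_seed: int = 42


def load_config(text: str) -> TrainConfig:
    """Build a TrainConfig from a JSON object, rejecting unknown keys."""
    overrides = json.loads(text)
    known = {f.name for f in fields(TrainConfig)}
    unknown = set(overrides) - known
    if unknown:
        raise ValueError(f"unknown config keys: {sorted(unknown)}")
    return TrainConfig(**overrides)
```

Rejecting unknown keys is a deliberate choice in this sketch: a misspelled hyperparameter fails loudly instead of silently falling back to a default, which helps with auditability.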

Configuration-driven design underpins many modern MLOps platforms and workflow engines.

Tests as a Signal of Intent

Including a tests/ directory signals that the project is designed to evolve. Tests provide safety when refactoring and enable automated validation during pull requests.

In MLOps workflows, tests often become gating mechanisms in CI pipelines, preventing broken training runs from progressing further downstream. While not universal in ML repositories, this practice reflects software engineering maturity.
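As a rough illustration of the kind of check that can gate a pipeline, the sketch below tests a small train/test split helper. The helper `split_indices` and the test names are hypothetical; the tests are written as plain functions with `assert` statements, a style that a runner such as pytest can collect.

```python
import random
from typing import List, Tuple


def split_indices(n_rows: int, test_fraction: float, seed: int) -> Tuple[List[int], List[int]]:
    """Shuffle row indices with a fixed seed and return (train, test) index lists."""
    if not 0.0 < test_fraction < 1.0:
        raise ValueError("test_fraction must be between 0 and 1")
    indices = list(range(n_rows))
    random.Random(seed).shuffle(indices)
    n_test = int(n_rows * test_fraction)
    return indices[n_test:], indices[:n_test]


def test_split_covers_all_rows_without_overlap():
    train, test = split_indices(10, 0.2, seed=0)
    assert sorted(train + test) == list(range(10))
    assert len(test) == 2


def test_same_seed_gives_same_split():
    assert split_indices(10, 0.2, seed=1) == split_indices(10, 0.2, seed=1)


def test_invalid_fraction_is_rejected():
    try:
        split_indices(10, 1.5, seed=0)
    except ValueError:
        return
    raise AssertionError("expected ValueError for test_fraction=1.5")
```

Checks like these are cheap to run on every pull request and catch data-handling regressions before an expensive training job starts.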

Scripts as Pipeline Entry Points

Explicit scripts for training and evaluation act as stable entry points for automation. Rather than relying on manual notebook execution, pipelines can invoke a single command and expect deterministic behavior. This design makes it straightforward to integrate the repository with CI/CD systems, schedulers, or managed ML platforms. It is a common pattern in production-grade ML systems.
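A script entry point of this kind might look like the following sketch. The flag names `--config` and `--seed`, their defaults, and the placeholder body are assumptions for illustration; the repository's actual scripts may differ.

```python
import argparse
from typing import List, Optional


def build_parser() -> argparse.ArgumentParser:
    """Define the command-line interface for a hypothetical training script."""
    parser = argparse.ArgumentParser(description="Train the classifier (illustrative CLI).")
    parser.add_argument("--config", default="config.json", help="path to a JSON config file")
    parser.add_argument("--seed", type=int, default=42, help="random seed for repeatable runs")
    return parser


def main(argv: Optional[List[str]] = None) -> int:
    """Parse arguments, run training, and return a process exit code."""
    args = build_parser().parse_args(argv)
    # Placeholder: a real script would load the config and call the training code here.
    print(f"training with config={args.config} seed={args.seed}")
    return 0
```

A script file would typically call `main()` under the usual `if __name__ == "__main__":` guard and pass the return value to `sys.exit`, so a CI job or scheduler can detect failure from the exit code. Accepting `argv` as a parameter also lets tests call `main(["--seed", "7"])` directly.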

Designing for the Future, Not Just the Model

What makes this repository effective is not any single file or folder, but the intentional structure as a whole. The project is organized in a way that anticipates collaboration, automation, and long-term evolution. MLOps is not something that gets bolted on later. It is enabled through design decisions made early in the lifecycle. Repository structure is one of those decisions, and one of the most important. Review the final architecture. Review my earlier post on MLOps.

Structure is not overhead.

Structure is strategy.