Introduction: The Rise of Hybrid AI Agents

AI agents are rapidly emerging as the next abstraction layer for enterprise artificial intelligence. Rather than interacting with models directly, organizations are beginning to deploy systems that can perceive inputs, reason over context, and take action with limited human intervention. Large language models have accelerated this shift dramatically, enabling agents to plan, converse, and adapt in ways that were not previously possible.

Yet LLM-only agents remain insufficient for enterprise-grade use. They are probabilistic by design, prone to hallucination, and often lack the determinism required for regulated or mission-critical workflows. Custom machine learning models sit at the opposite end of the spectrum. They offer precision, explainability, and repeatability, but struggle with ambiguity, reasoning, and generalization.

The path forward is not a choice between these approaches, but a synthesis of both. Hybrid agents, which combine LLM-driven reasoning with domain-specific ML execution, are becoming the dominant architectural pattern for enterprises seeking autonomy without sacrificing control. Recent research highlights that such systems generalize better to new tasks, produce richer contextual outputs, and enable more natural human-AI interaction, particularly in areas such as fraud detection, forecasting, and compliance.

What Defines an AI Agent Today

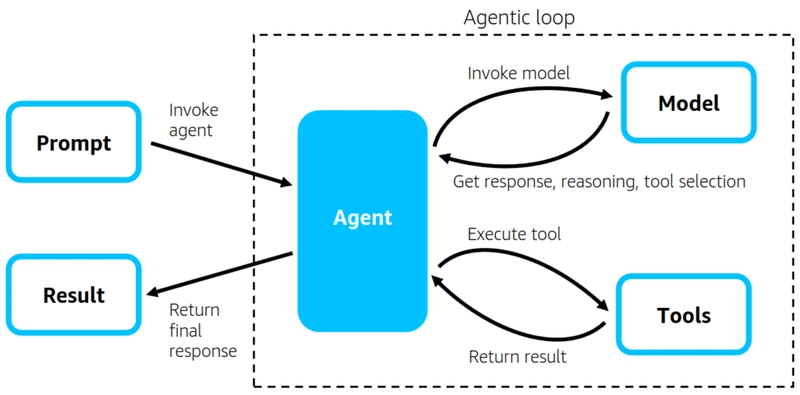

Modern AI agents are no longer glorified chat interfaces. At their core, they are composed systems designed to operate continuously within a broader software ecosystem. They maintain memory that persists across interactions, enabling them to accumulate context over time rather than responding statelessly. They invoke tools dynamically, calling APIs, databases, and machine learning models as part of their reasoning process. Policies and constraints govern how and when actions may be taken, while explicit goals—defined by users or systems—shape the agent’s decision-making.

Most importantly, agents exhibit autonomy. They can decompose tasks into steps, select appropriate tools, and act without constant human supervision. Frameworks such as Azure AI Agents, LangChain, and Semantic Kernel formalize these capabilities, allowing agents to move beyond conversation into execution. In production environments, this shift is already visible. Enterprise platforms are reporting significant reductions in manual workload as agentic systems autonomously resolve large portions of routine requests.

Why Hybridization Matters: LLMs and ML Working Together

Large language models excel at reasoning, planning, and interacting with unstructured data. They can interpret natural language, synthesize information across sources, and adapt to new scenarios with minimal retraining. However, their outputs are inherently probabilistic and difficult to guarantee at scale.

Custom machine learning models fill this gap. Classifiers, regressors, and forecasting models provide deterministic outputs that can be validated, audited, and reproduced. They are tightly aligned with domain data and regulatory requirements, making them indispensable in enterprise settings.

Hybridization allows each approach to operate where it performs best. In fraud detection, an LLM may analyze contextual signals across transactions and user behavior, while a trained ML model makes the final risk determination. In forecasting, LLMs can reason over macro signals and qualitative inputs, while time-series models produce numerical predictions. In document processing or visual inspection, LLMs extract semantic meaning, and ML models validate classifications or detect anomalies. Empirical studies increasingly show that these combined systems significantly reduce hallucinations while improving reliability and decision quality.

Architectural Patterns for Hybrid Agents

Several architectural patterns have emerged as enterprises experiment with hybrid agents. In LLM-first orchestration, the language model acts as the planner, interpreting user intent and delegating tasks to ML tools as needed. This approach works well for dynamic, knowledge-intensive workflows where flexibility is paramount.
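As a rough illustration of LLM-first orchestration, the sketch below lets a planner choose a tool by name and then dispatches to it. Everything here is hypothetical: `plan` is a keyword stub standing in for a real LLM call, and `score_fraud` and `forecast_demand` stand in for deployed ML models.

```python
from typing import Callable, Dict


def score_fraud(payload: dict) -> dict:
    # Stand-in for a trained classifier: flags large amounts as high risk.
    return {"risk": "high" if payload["amount"] > 10_000 else "low"}


def forecast_demand(payload: dict) -> dict:
    # Stand-in for a time-series model: naive mean of the history.
    history = payload["history"]
    return {"forecast": sum(history) / len(history)}


# Registry of tools the planner is allowed to select from.
TOOLS: Dict[str, Callable[[dict], dict]] = {
    "score_fraud": score_fraud,
    "forecast_demand": forecast_demand,
}


def plan(request: str) -> str:
    """Stub planner; a real system would ask an LLM to return a tool name."""
    return "score_fraud" if "transaction" in request.lower() else "forecast_demand"


def run_agent(request: str, payload: dict) -> dict:
    tool_name = plan(request)
    if tool_name not in TOOLS:
        # Never execute a tool name the planner made up.
        raise ValueError(f"unknown tool: {tool_name}")
    return {"tool": tool_name, "result": TOOLS[tool_name](payload)}
```

The registry check matters in this pattern: because the planner's output is probabilistic, the dispatcher treats it as a suggestion to validate rather than a command to trust.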

In contrast, model-first orchestration places ML models at the center of execution. Here, deterministic models handle the majority of routine decisions, and the LLM is invoked only when ambiguity or exception handling is required. This pattern is particularly effective for high-volume, low-latency systems.
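A minimal sketch of model-first routing might look like the following. The thresholds, the `classify` scoring rule, and the `llm_review` stub are all assumptions made for illustration; the point is only that the LLM is reached when the model's score falls in an ambiguous band.

```python
from typing import Callable


def classify(amount: float) -> float:
    """Stand-in for a trained model; returns a fraud probability in [0, 1]."""
    return min(amount / 20_000, 1.0)


def llm_review(amount: float) -> str:
    """Stub for an LLM call that handles ambiguous cases only."""
    return "escalate_to_analyst"


def decide(
    amount: float,
    low: float = 0.2,
    high: float = 0.8,
    reviewer: Callable[[float], str] = llm_review,
) -> str:
    probability = classify(amount)
    if probability <= low:
        return "approve"  # confident and routine: no LLM call
    if probability >= high:
        return "block"  # confident and routine: no LLM call
    return reviewer(amount)  # ambiguous band: invoke the LLM
```

Under these assumed thresholds, `decide(1_000)` and `decide(18_000)` never touch the reviewer, which is what keeps latency and cost low for the bulk of traffic.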

Parallel evaluation architectures run LLM and ML analysis side by side, comparing or reconciling outputs before a final decision is made. This approach is common in mission-critical domains where robustness outweighs cost considerations. Human-in-the-loop designs introduce validation checkpoints, ensuring that sensitive or regulated decisions receive explicit oversight before execution.
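One way to picture parallel evaluation with a human checkpoint is sketched below. The two assessors are toy stand-ins (an amount rule for the ML model, a keyword rule for the LLM), and the reconciliation rule, keeping the ML label but holding disagreements for review, is just one possible policy.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass


@dataclass
class Decision:
    label: str
    needs_human_review: bool


def ml_assess(tx: dict) -> str:
    # Stand-in for a deterministic model.
    return "fraud" if tx["amount"] > 10_000 else "legit"


def llm_assess(tx: dict) -> str:
    # Stand-in for an LLM reading unstructured context.
    return "fraud" if "gift cards" in tx["memo"].lower() else "legit"


def reconcile(ml_label: str, llm_label: str, sensitive: bool = False) -> Decision:
    if ml_label != llm_label:
        # Disagreement: keep the deterministic label but hold for review.
        return Decision(ml_label, True)
    # Agreement: review only if the decision is flagged as sensitive.
    return Decision(ml_label, sensitive)


def evaluate(tx: dict, sensitive: bool = False) -> Decision:
    # Run both analyses side by side, then reconcile their outputs.
    with ThreadPoolExecutor(max_workers=2) as pool:
        ml_future = pool.submit(ml_assess, tx)
        llm_future = pool.submit(llm_assess, tx)
        return reconcile(ml_future.result(), llm_future.result(), sensitive)
```

For example, a small transaction whose memo mentions gift cards would pass the amount rule but trip the text rule, so it comes back labeled by the model and flagged for a human.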

Designing the Agent’s Tooling Layer

The tooling layer is where hybrid agents interface with the rest of the enterprise stack. Custom ML models must be exposed as stable, versioned services, typically through managed endpoints or containerized runtimes. Strict input and output schemas are essential, allowing agent reasoning to remain predictable and verifiable.
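To make the idea of strict, versioned schemas concrete, here is a small standard-library sketch. The field names, the `MODEL_VERSION` string, and the scoring rule are hypothetical; a production service would more likely rely on a schema library and a real model.

```python
from dataclasses import dataclass

MODEL_VERSION = "risk-model-1.0.0"  # hypothetical version identifier


@dataclass(frozen=True)
class RiskRequest:
    transaction_id: str
    amount: float

    def __post_init__(self):
        if not isinstance(self.transaction_id, str) or not self.transaction_id:
            raise ValueError("transaction_id must be a non-empty string")
        is_number = isinstance(self.amount, (int, float)) and not isinstance(self.amount, bool)
        if not is_number or self.amount < 0:
            raise ValueError("amount must be a non-negative number")


@dataclass(frozen=True)
class RiskResponse:
    transaction_id: str
    risk_score: float
    model_version: str


def parse_request(raw: dict) -> RiskRequest:
    # Reject missing or unexpected fields instead of guessing.
    if set(raw) != {"transaction_id", "amount"}:
        raise ValueError(f"unexpected fields: {sorted(raw)}")
    return RiskRequest(**raw)


def score(request: RiskRequest) -> RiskResponse:
    # Stand-in for a model call; the version travels with every response.
    risk = min(request.amount / 20_000, 1.0)
    return RiskResponse(request.transaction_id, risk, MODEL_VERSION)
```

Returning the model version in every response is a design choice that makes later audits simpler, since each score can be traced to the exact model that produced it.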

Prompt design plays a critical role at this layer. LLMs must be guided explicitly on when and how to invoke tools, rather than improvising responses. Guardrails such as logging, throttling, and access controls ensure that tool usage remains auditable and compliant, even as agents scale across teams and workloads.
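The guardrails mentioned above can be sketched as a thin wrapper around a tool. This is an illustrative design, not a reference implementation: the `GuardedTool` class, its role names, and the sliding-window limit are assumptions, and the injectable `clock` exists only to make the behavior easy to test.

```python
import logging
import time
from collections import deque

logger = logging.getLogger("agent.tools")


class GuardedTool:
    """Wraps a tool with access control, throttling, and logging."""

    def __init__(self, name, func, allowed_roles, max_calls, per_seconds,
                 clock=time.monotonic):
        self.name = name
        self.func = func
        self.allowed_roles = set(allowed_roles)
        self.max_calls = max_calls
        self.per_seconds = per_seconds
        self.clock = clock
        self._calls = deque()  # timestamps of recent accepted calls

    def __call__(self, role, payload):
        if role not in self.allowed_roles:
            logger.warning("denied tool=%s role=%s", self.name, role)
            raise PermissionError(f"role {role!r} may not call {self.name}")
        now = self.clock()
        # Drop timestamps that have left the sliding window.
        while self._calls and now - self._calls[0] >= self.per_seconds:
            self._calls.popleft()
        if len(self._calls) >= self.max_calls:
            logger.warning("throttled tool=%s role=%s", self.name, role)
            raise RuntimeError(f"rate limit exceeded for {self.name}")
        self._calls.append(now)
        logger.info("invoked tool=%s role=%s", self.name, role)
        return self.func(payload)
```

A tool wrapped this way would be called as `tool("analyst", payload)`, so every invocation carries the caller's role and leaves a log line whether it succeeds, is denied, or is throttled.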

Building the Reasoning Layer

At the heart of the agent lies its reasoning layer. Effective agents rely on structured prompts that encourage planning, reflection, and stepwise execution rather than single-shot responses. Policy prompts define operational boundaries, embedding risk management and safety constraints directly into the agent’s behavior.
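A hedged sketch of how a policy might be embedded in a structured prompt and then enforced on the model's reply is shown below. The `POLICY` contents, the prompt wording, and the JSON plan format are all invented for illustration; no particular LLM API is assumed, and the plan text would come from whatever model the agent uses.

```python
import json

# Hypothetical operational boundaries for the agent.
POLICY = {"allowed_tools": {"score_fraud", "lookup_customer"}, "max_steps": 3}


def build_prompt(goal: str) -> str:
    """Compose a planning prompt that states the policy explicitly."""
    tools = ", ".join(sorted(POLICY["allowed_tools"]))
    return (
        "You are a planning agent. Think step by step before acting.\n"
        f"Goal: {goal}\n"
        f"Allowed tools: {tools}\n"
        f"Use at most {POLICY['max_steps']} steps.\n"
        'Respond with JSON: {"steps": [{"tool": "...", "reason": "..."}]}'
    )


def validate_plan(raw: str) -> list:
    """Parse the model's JSON plan and reject anything outside the policy."""
    steps = json.loads(raw)["steps"]
    if len(steps) > POLICY["max_steps"]:
        raise ValueError("plan exceeds step limit")
    for step in steps:
        if step["tool"] not in POLICY["allowed_tools"]:
            raise ValueError(f"tool not permitted: {step['tool']}")
    return steps
```

Stating the policy in the prompt guides the model, while `validate_plan` enforces the same policy in code, so a plan that ignores the instructions is rejected before anything executes.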

To further reduce hallucinations, many architectures combine retrieval-augmented generation with ML-backed decisions. External knowledge sources ground the LLM’s reasoning, while deterministic models anchor outputs in measurable signals. This combination is proving essential for enterprise trust and reliability.
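The grounding idea can be illustrated with a toy example. The retrieval here is plain word overlap rather than an embedding search, and the documents, function names, and prompt layout are assumptions; the sketch only shows retrieved context and a model score being placed side by side in the prompt.

```python
def retrieve(query: str, documents: list, k: int = 2) -> list:
    """Rank documents by shared words with the query (toy retrieval)."""
    query_words = set(query.lower().split())

    def overlap(doc: str) -> int:
        return len(query_words & set(doc.lower().split()))

    # sorted() is stable, so ties keep their original order.
    return sorted(documents, key=overlap, reverse=True)[:k]


def build_grounded_prompt(question: str, documents: list, risk_score: float) -> str:
    """Combine retrieved context with a deterministic model score."""
    context = "\n".join(f"- {doc}" for doc in retrieve(question, documents))
    return (
        f"Context:\n{context}\n"
        f"Model risk score: {risk_score:.2f}\n"
        f"Question: {question}\n"
        "Answer using only the context and the score above."
    )
```

The LLM is asked to explain or act on a score it did not produce, using passages it did not invent, which is the sense in which both halves constrain its output.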

Integrating Custom ML Models

Hybrid agents can consume ML models deployed across a variety of environments. Cloud-hosted endpoints provide scalability and centralized management, while serverless deployments offer cost-efficient burst capacity. Edge and on-device models support real-time inference in latency-sensitive scenarios, and containerized deployments ensure portability across platforms.

Agents commonly interact with classifiers, regressors, embedding models, computer vision systems, and time-series forecasters. Operationally, these integrations must be continuously monitored for latency, cost, drift, and failure modes. Without strong observability, even well-designed hybrid agents can degrade silently over time.
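As a simple illustration of the monitoring described above, the sketch below checks two signals: input drift, measured as the shift of the recent mean in baseline standard deviations, and 95th-percentile latency using the nearest-rank method. The thresholds and the drift measure are assumptions chosen for brevity; real deployments often use richer statistical tests.

```python
import statistics


def p95(latencies_ms: list) -> float:
    """95th percentile by the nearest-rank method."""
    ordered = sorted(latencies_ms)
    rank = (95 * len(ordered) + 99) // 100  # integer ceil(0.95 * n)
    return ordered[rank - 1]


def drift_score(baseline: list, recent: list) -> float:
    """Shift of the recent mean, in baseline standard deviations."""
    spread = statistics.stdev(baseline)
    return abs(statistics.mean(recent) - statistics.mean(baseline)) / spread


def check_health(baseline, recent, latencies_ms,
                 max_drift: float = 3.0, max_p95_ms: float = 200.0) -> list:
    """Return the names of any alerts that should fire."""
    alerts = []
    if drift_score(baseline, recent) > max_drift:
        alerts.append("input_drift")
    if p95(latencies_ms) > max_p95_ms:
        alerts.append("latency")
    return alerts
```

Running a check like this on a schedule is one way to catch the silent degradation the text warns about, since neither drift nor tail latency shows up as an outright failure.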

Governance, Observability, and Safety

Enterprise adoption hinges on governance. Every agent action, tool invocation, and model output must be logged and traceable. Monitoring frameworks assess output quality, detect anomalies, and surface performance regressions. Comprehensive audit trails enable compliance teams to reconstruct decisions long after execution, a requirement that becomes non-negotiable in regulated industries.
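One possible shape for such an audit trail is an append-only log in which each entry stores the hash of the previous one. The hash chain is an added assumption, not something the text prescribes, and the `AuditLog` class is hypothetical; timestamps are omitted here to keep the example deterministic.

```python
import hashlib
import json

FIELDS = ("actor", "action", "detail", "prev_hash")


def _digest(body: dict) -> str:
    # Canonical JSON so the same entry always hashes the same way.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


class AuditLog:
    """Append-only log where each entry is chained to the previous one."""

    def __init__(self):
        self.entries = []

    def record(self, actor: str, action: str, detail: dict) -> dict:
        prev_hash = self.entries[-1]["hash"] if self.entries else ""
        body = {"actor": actor, "action": action, "detail": detail,
                "prev_hash": prev_hash}
        entry = {**body, "hash": _digest(body)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute every hash and confirm the chain is unbroken."""
        prev_hash = ""
        for entry in self.entries:
            body = {key: entry[key] for key in FIELDS}
            if entry["prev_hash"] != prev_hash or _digest(body) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True
```

Because every hash depends on the entry before it, editing an old record changes its digest and `verify` returns `False`, which gives compliance teams a cheap integrity check when reconstructing a decision.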

Looking Ahead: Multi-Model, Multi-Agent Systems

The next evolution of agentic systems will not be single, monolithic agents, but coordinated networks of specialists. Distinct agents, each optimized for planning, execution, validation, or oversight, will collaborate within orchestrated workflows. Research increasingly suggests that these multi-agent systems will define enterprise AI over the next several years, enabling greater scalability and resilience through specialization.

Conclusion

Hybrid AI agents that combine the flexibility of large language models with the precision of custom machine learning represent the future of enterprise autonomy. Successfully deploying these systems requires more than technical integration. It demands disciplined architecture, reproducible execution, and robust governance from day one.

Enterprises that embrace this blueprint, starting with narrow, high-value use cases and scaling deliberately, will be best positioned to unlock the promise of intelligent, autonomous systems while maintaining the control and trust their environments require.
